Apple has announced new safeguards to limit the spread of child sexual abuse material (CSAM). The Cupertino-based tech company has unveiled a tool that checks photos on iPhones and other Apple devices against known CSAM. The new detection features will arrive in future updates to iOS 15, iPadOS 15, watchOS 8, and macOS Monterey.

The new child safety features span three areas: Photos, Siri and Search, and Messages. Apple says these safeguards were developed in collaboration with child safety specialists and are designed to protect users’ privacy.

The new CSAM detection technology will be able to detect known child abuse images stored in iCloud Photos. Apple states that, instead of scanning photos in the cloud, the new tool uses an “on-device matching database of known CSAM image hashes provided by NCMEC (National Center for Missing and Exploited Children) and other child safety organizations.”

Apple says that, to protect privacy, this database is transformed into an “unreadable set of hashes that is securely stored on users’ devices.”

Privacy in mind

According to Apple, this “matching process is enabled by a cryptographic technology called private set intersection, which assesses if there is a match without exposing the result.” The iPhone “creates a cryptographic safety voucher that encodes the match result as well as extra encrypted data about the image,” the company adds. This voucher is uploaded to iCloud Photos along with the image.

Apple is also using another technology, dubbed “threshold secret sharing,” to ensure that the contents of the safety vouchers “cannot be understood by Apple unless the iCloud Photos account surpasses a threshold of known CSAM content.” “The cryptographic technology allows Apple to read the contents of the safety vouchers linked with the matched CSAM images only when the threshold is exceeded,” Apple clarifies.
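To give a rough sense of how a threshold scheme behaves, here is a minimal Python sketch of Shamir's secret sharing, a textbook form of threshold secret sharing. It is an illustration only, not Apple's implementation: the function names, the prime modulus, and the example threshold of 3 are all assumptions made for this sketch. The idea it shows is that a secret (think of a key that unlocks the vouchers) is split into shares, and any set of shares at or above the threshold recovers it, while fewer shares do not.

```python
import secrets

# Illustrative field modulus (the Mersenne prime 2**127 - 1); an assumption for this sketch.
PRIME = 2**127 - 1


def split_secret(secret, threshold, num_shares):
    """Split `secret` into `num_shares` points; any `threshold` of them recover it."""
    if not 0 <= secret < PRIME:
        raise ValueError("secret must be in the range [0, PRIME)")
    if not 1 <= threshold <= num_shares:
        raise ValueError("threshold must be between 1 and num_shares")
    # Random polynomial of degree threshold - 1 whose constant term is the secret.
    coeffs = [secret] + [secrets.randbelow(PRIME) for _ in range(threshold - 1)]
    shares = []
    for x in range(1, num_shares + 1):
        y = 0
        for c in reversed(coeffs):  # Horner's rule, modulo PRIME
            y = (y * x + c) % PRIME
        shares.append((x, y))
    return shares


def recover_secret(shares):
    """Lagrange interpolation at x = 0 over the given shares."""
    secret = 0
    for i, (xi, yi) in enumerate(shares):
        num, den = 1, 1
        for j, (xj, _) in enumerate(shares):
            if i != j:
                num = (num * -xj) % PRIME
                den = (den * (xi - xj)) % PRIME
        # pow(den, -1, PRIME) is the modular inverse (Python 3.8+).
        secret = (secret + yi * num * pow(den, -1, PRIME)) % PRIME
    return secret


if __name__ == "__main__":
    key = 123456789  # hypothetical stand-in for a decryption key
    shares = split_secret(key, threshold=3, num_shares=5)
    print(recover_secret(shares[:3]) == key)  # three shares meet the threshold
    print(recover_secret(shares[:2]) == key)  # two shares fall short
```

In this picture, each matching image would contribute one share, so the key only becomes recoverable once enough matches accumulate. With fewer shares than the threshold, interpolation yields an unrelated value.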

More CSAM detection measures

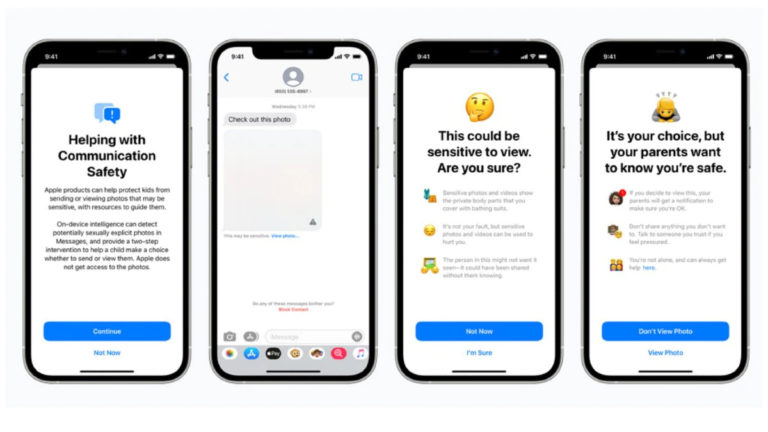

In addition, the company announced that it will add capabilities to the Messages app to alert children and their parents when sexually explicit photos are received or sent. According to Apple, if a child receives such a photo, it will be “blurred, and the child will be alerted and presented with helpful resources, as well as reassured that it is alright if they do not wish to view this photo.” If the child chooses to view the photo anyway, parents can be notified.

Similarly, if a child tries to send sexually explicit images, he or she will be warned before sending the photo, and parents will have the option of receiving a message if the child decides to send it. According to the company, the “feature is designed to prevent Apple from accessing the communications.”

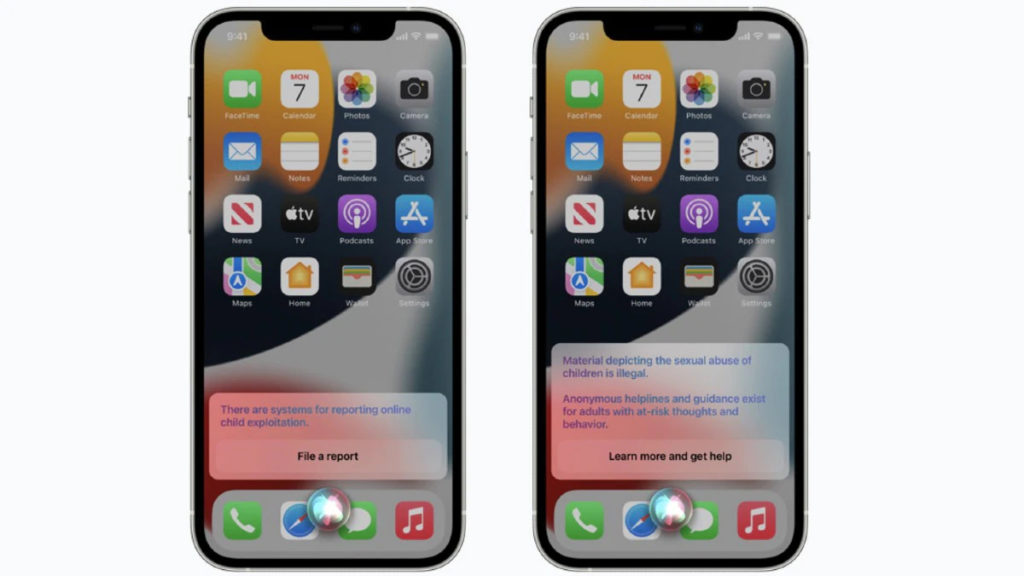

Apple is also enhancing Siri and Search by adding more resources to help kids and parents stay safe online. Siri will be able to tell users how to report CSAM to the authorities. Siri and Search will also intervene when users search for CSAM-related queries.
